AR + AI: Evolution from Tool to “Second Brain”

Dr. Qiu Xiaoling, CFO of JBD, has recently delivered a penetrating analysis of the MicroLED microdisplay and AR glasses ecosystem, illuminating how these once-independent frontiers are coalescing to redefine wearable AI. As AI evolves from merely being “smarter” toward truly “more attuned to you,” users increasingly expect an always-on digital assistant. AR glasses have emerged as the quintessential conduit for frictionless, context-aware interaction, and the ultra-compact MicroLED microdisplay has become the linchpin technology that turns the vision of lightweight, all-day wearable intelligence into daily reality.

Given today’s breakneck pace of technological progress, people may soon no longer need to hunch over a smartphone. Instead, a lightweight pair of glasses will deliver real‑time information, translation, environmental awareness, and reasoning, and will even anticipate their needs. This is no sci‑fi fantasy but a tangible outcome of the convergence of AI and AR.

The fusion of AI and AR offers consumers a far more natural, convenient means of interaction and is catalyzing a revolution in smart wearable electronics. Meta’s CTO Andrew Bosworth has remarked, “The always‑on AI experience will allow smart glasses to replace the smartphone.” [1] The future is rapidly approaching.

1. AI Foundation Models: Democratization and Personalized Intelligence

Large reasoning models have imbued AI with the ability to “think like a human”: beyond parsing surface text, they grasp context and logic, generating responses that mirror human cognition. This breakthrough has dramatically elevated baseline AI capability and is already proving invaluable in daily work.

DeepSeek is a striking example. By optimizing both its algorithms and its system architecture, it markedly improves pre‑training efficiency and inference speed, slashing development time and cost. As a result, AI applications can now run on a far wider range of devices, including resource‑constrained mobile hardware, truly democratizing the technology.

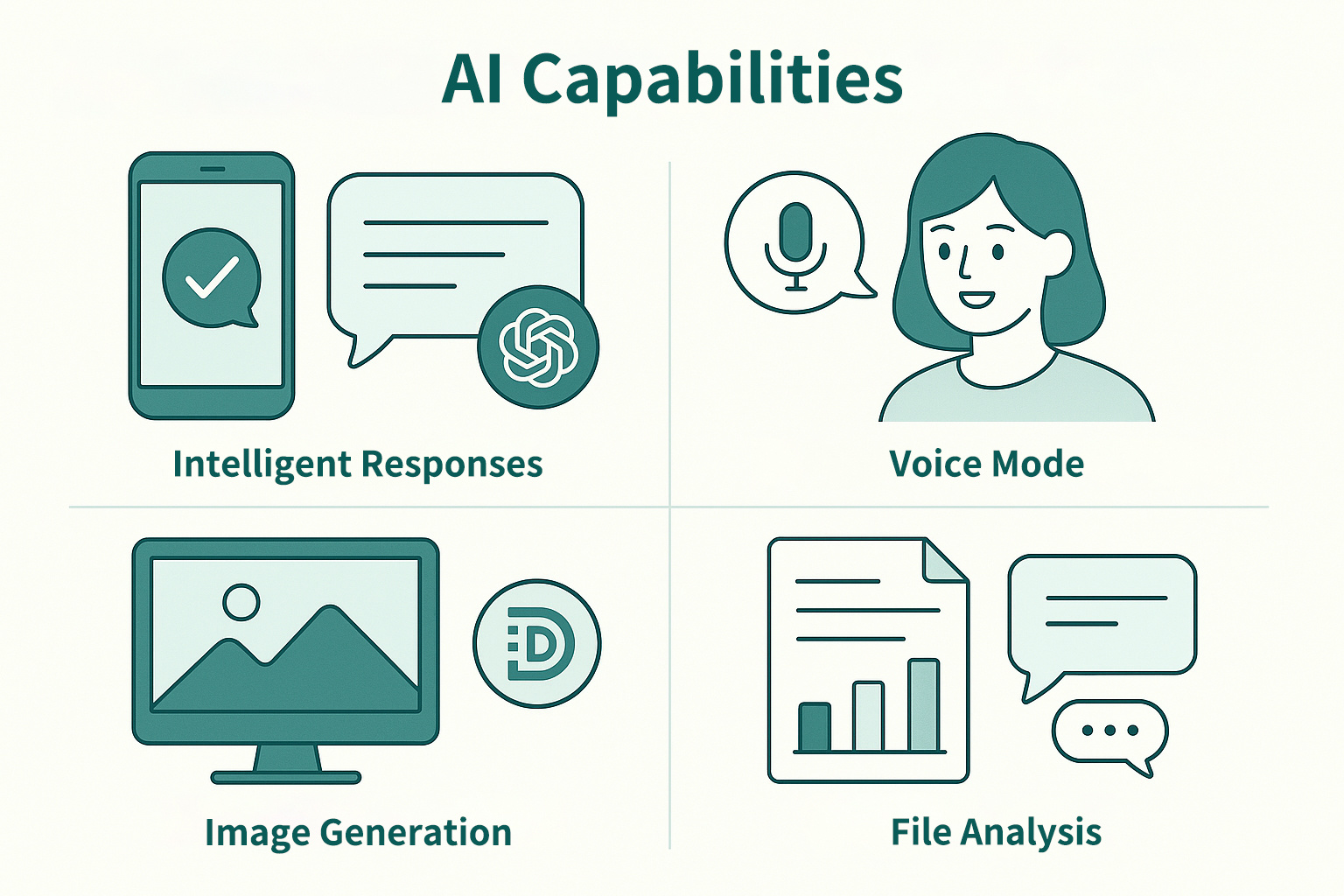

AI Capabilities

As AI grows ever more powerful, the paramount question becomes how to harness it efficiently and effortlessly. Every user’s needs differ—text, images, video, code generation, and more. Multimodal foundation models that fuse diverse data types can deliver bespoke services to each user and satisfy that diversity of use cases.

Hence AI is evolving from “smarter” to “more attuned to you.” By continuously learning from users’ behavioral data, AI systems refine their performance and services over time, achieving genuine personalized intelligence and extending into ever broader and deeper domains.

2. The All‑Day AI Assistant: From “Omnipotent” to “Omnipresent”

Input and output are equally crucial in AI interaction. Input is what you ask AI to analyze; output is the result, whether text, audio, images, or video. Today the PC remains the device through which most people engage with AI, yet it is hardly the most natural interface.

An all‑day AI assistant supplies information and interaction anytime, anywhere, without relying on a phone or PC. Voice modes in tools such as ChatGPT are now commonplace, showing that talking to AI need not be tied to a keyboard and screen, and that AI’s flexibility should no longer be constrained by hardware form factor.

Clip‑on and handheld products such as the Humane AI Pin and the Rabbit R1 pursued greater flexibility, but the market response was lukewarm. Beyond shortcomings in physical design, a decisive factor was that interacting with them was simply not convenient enough.

As AI grows “omnipotent,” the ideal paradigm is to issue commands and receive answers anytime, anywhere—for example, consulting a recipe or setting a virtual timer while your hands are busy in the kitchen. That demands an “always‑on” device that senses your needs.

Rokid Glasses – Payment

While true‑wireless‑stereo (TWS) earbuds are popular, smart glasses clearly win on long‑wear comfort and functional integration. They also give AI a visual display, enabling multimodal interaction that no earbud can match. Because their cameras, microphones, and other sensors sit at eye and ear level, they capture far richer first‑person context than a smartphone, strengthening support for multimodal AI. As Mark Zuckerberg notes, “Glasses are uniquely positioned to let people see what you see and hear what you hear.” [2]

3. AR + AI: Redrawing the Frontiers of Human‑Machine Collaboration

AI turns AR glasses into a personal assistant—and, ultimately, a “second brain.” They can recommend books or films based on your history and preferences. The Verge wrote of Android XR prototypes: “For that hour I felt like Tony Stark, and Gemini was my J.A.R.V.I.S.” [3]

Combining AI with AR’s visual display even lets devices anticipate needs: reminding you of your hotel‑room number, translating a foreign menu, or highlighting the next tool during furniture assembly.

To make AR glasses the optimal AI carrier, however, designers must overcome the engineering hurdle of true all‑day ergonomics. Optical architecture drives form factor: many LCoS, DLP, or BirdBath solutions are simply too bulky for stylish everyday wear.

Even Realities G1 A [4]

MicroLED microdisplays are pivotal. Their tiny volume, high brightness, and low power draw enable lightweight AR designs. The smallest optical engine now measures just 0.15 cm³ and weighs only 0.3 g. Powered by JBD’s MicroLED displays, the Vuzix Z100 and OPPO Air Glass 2 look little different from conventional eyewear and weigh under 40 grams, light enough for all‑day wear.

Extreme miniaturization is bringing AR into the mainstream, while rapid AI iteration, always‑on connectivity, and seamless interaction embed smart glasses ever more deeply in daily life.

AR, when fused with AI, is not merely reshaping how we connect with the world and ushering in the next generation of interaction; it is also forging a new level of human‑machine symbiosis and fast maturing into humanity’s “second brain.” By infusing everyday life with unparalleled convenience and disruptive innovation, this convergence propels us toward a future that is smarter and brighter.

References

[1]. https://www.thesun.co.uk/tech/30695475/meta-orion-glasses-boz-andrew-bosworth-interview-ai

[2]. https://www.theverge.com/24253481/meta-ceo-mark-zuckerberg-ar-glasses-orion-ray-bans-ai-decoder-interview

[3]. https://www.theverge.com/2024/12/12/24319528/google-android-xr-samsung-project-moohan-smart-glasses

[4]. https://www.evenrealities.com/products/g1-a
